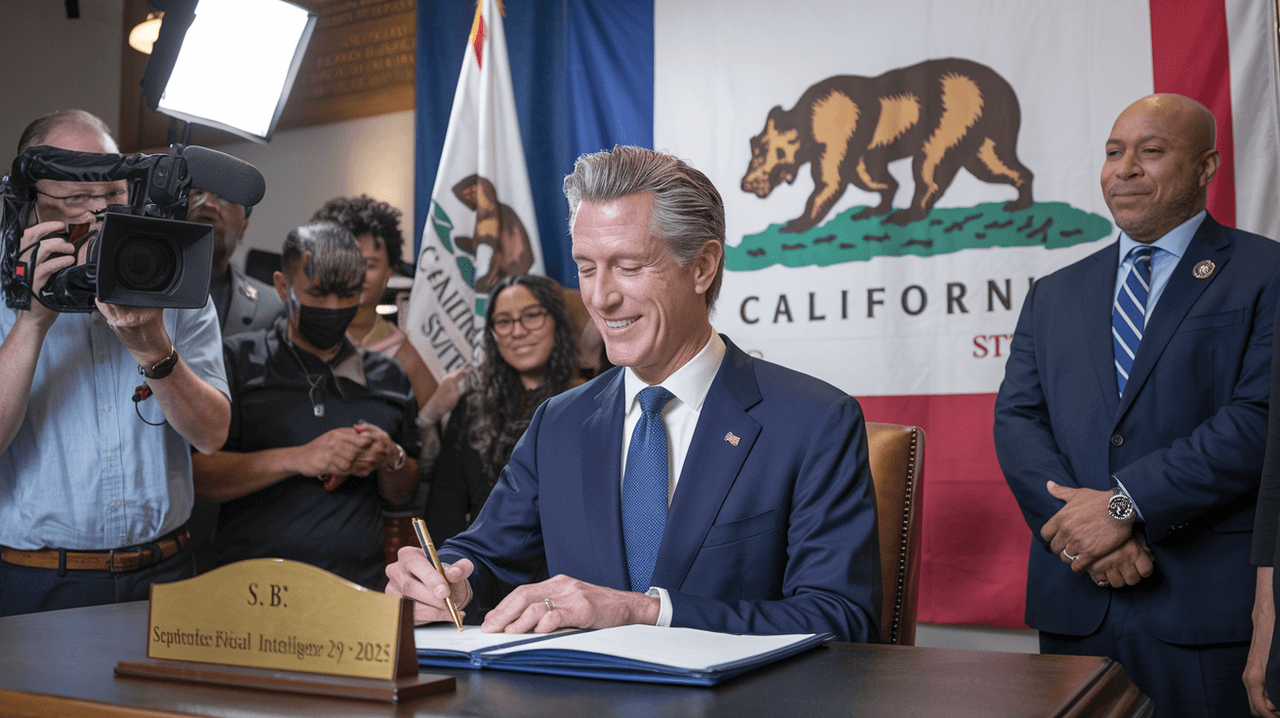

About a year ago, a friend of mine who works at a tech startup told me, half-jokingly, "When AI takes over, I just hope it remembers which snacks I like." Now, fast-forward to September 29, 2025: California isn't just prepping the pantry for our digital overlords; it's setting strict ground rules before letting them in the door. Governor Gavin Newsom’s signature on the Transparency in Frontier Artificial Intelligence Act—SB 53—marks not just a legal milestone, but the first time I've seen engineers, lawyers, and activists glued to the same press conference. Let’s get personal about what this means for you, your tech, and our collective future.

California's Bold Step: Why Now, and What’s at Stake for AI?

On September 29, 2025, California made history when Governor Gavin Newsom signed S.B. 53, the Transparency in Frontier Artificial Intelligence Act, into law. The move marks a turning point for AI regulation in California and signals the state’s determination to fill the regulatory void left by federal inaction. As artificial intelligence rapidly transforms industries and daily life, California is stepping up to ensure that innovation does not come at the expense of safety and public trust.

Why Now? The Urgency Behind SB 53

For years, tech policy rarely came up outside industry circles. Now it is a regular subject at dinner tables and in the headlines. The explosive growth of AI systems, from chatbots and AI influencers to open-source platforms, has raised new questions about safety, ethics, and accountability. Yet while the technology advanced, regulation lagged behind. SB 53, authored by Senator Scott Wiener, directly addresses this gap, making California the first state to impose clear transparency and reporting requirements on developers of the most advanced AI systems.

Senator Wiener summed up the state’s proactive stance:

"California has long been the vanguard of tech. SB 53 ensures we're also the guardians."

What’s at Stake: Accountability and Public Trust

With this legislation, California is telling AI developers: no more ‘just trust us.’ Under the new law, companies building the most advanced AI systems must:

- Regularly report on the safety protocols they use during development

- Disclose the greatest risks their AI systems could pose to society

- Comply with strengthened whistleblower protections for employees who raise ethical or safety concerns

These measures are not just about compliance—they are about building public trust in a technology that is increasingly shaping how we live and work. By requiring companies to self-report on risks and safety, California is demanding a new level of transparency and responsibility from the tech sector.

California’s Leadership in AI Best Practices

The passage of SB 53 is more than a state initiative; it could become a template for future regulation nationwide. As the first law of its kind, the Transparency in Frontier Artificial Intelligence Act sets out a regulatory framework that other states, and perhaps even federal lawmakers, are likely to look to as AI governance evolves.

California’s leadership in AI best practices is clear. The state is not only home to many of the world’s leading AI companies, but it is also now at the forefront of shaping how these technologies are governed. The law’s focus on transparency, accountability, and whistleblower protection reflects a broader societal demand for responsible innovation.

As reported by The New York Times, this legislative milestone comes at a time when AI’s influence is expanding into finance, media, and everyday decision-making. The stakes are high: how we regulate AI today will shape the technology’s impact for years to come.

Transparency & Safety: Demanding More from AI Companies

On September 29, 2025, California set a new standard for AI transparency when Governor Newsom signed the Transparency in Frontier Artificial Intelligence Act (SB 53). If you’re following the evolution of AI regulation, you’ll notice that this law directly targets the world’s largest AI developers, such as OpenAI, Google DeepMind, Meta, and Anthropic, by demanding a new level of openness and accountability.

Public Reporting: Shedding Light on AI Safety Protocols

Under SB 53, reporting on AI safety protocols is no longer optional or kept behind closed doors. Major AI firms must now submit regular, detailed reports to California’s Office of Emergency Services. These reports must outline:

- The specific safety measures in place during the development and deployment of their AI systems

- Comprehensive disclosures of the most significant risks their technologies could pose

- Any critical safety incidents, including crimes or harms committed by AI systems without human oversight

These AI accountability and transparency measures are designed to keep not only regulators but also the public informed about the real-world impact of advanced AI. As you sit with friends at a café debating the future of technology, you might wonder: Is this the end of ‘black box’ AI?

Foundation Models Under the Microscope

SB 53 specifically addresses foundation models: powerful AI systems trained on vast, general datasets and capable of a wide range of tasks. These models, which underpin everything from chatbots to content generators, are now subject to the strictest AI transparency rules in the country. Companies must provide annual updates and report significant safety incidents promptly, ensuring ongoing oversight.

Incident-Based Reporting: No More Secrets

Transparency doesn’t stop at annual reports. If an AI system is involved in a critical safety incident—such as generating harmful content or enabling a crime—companies are required to notify the Office of Emergency Services promptly. This reporting of critical safety incidents is a direct response to public concerns about AI systems acting without human intervention.

Whistleblower Protections: Empowering Industry Insiders

Another key feature of SB 53 is its reinforcement of whistleblower protections. If you work in the AI sector and witness ethical or safety issues, the law now offers stronger safeguards should you choose to speak out. This is intended to foster a culture where employees feel secure in reporting problems, further supporting AI accountability and transparency measures.

"Transparency is the foundation of public trust in AI." – Cecilia Kang

Industry Response: A Divided Field

The law has sparked debate across the tech industry. Anthropic, a leading AI company, supported SB 53, while OpenAI and Meta lobbied against it, citing concerns over regulatory burden. Still, the new requirements are now a reality for the large AI developers that fall under the law.

As you watch these developments unfold, California’s bold approach looks set to reshape how AI companies operate, making transparency and safety much harder to treat as optional.

Opening the Doors: Whistleblowers, Patchwork Politics, and New Norms

With the signing of California’s SB 53, the Transparency in Frontier Artificial Intelligence Act, you are witnessing a transformation in how the AI industry is held accountable. This law is not just about technical compliance—it’s about empowering people inside the industry to speak up, and about setting new expectations for how AI companies operate under a web of evolving state regulations.

Whistleblower Protections in the AI Industry: A New Era

One of the most significant changes brought by SB 53 is the strengthening of whistleblower protections in the AI industry. If you’re an engineer, researcher, or employee at an AI company, the law now gives you more security if you notice something dangerous or unethical. Reporting concerns about risky AI systems is less likely to put your career in jeopardy. As Senator Scott Wiener, the bill’s author, put it:

“Protecting those who speak out is how we build safer tech.”

These protections are designed to foster a responsible reporting environment. Imagine an AI engineer who notices that a model is behaving dangerously. Under SB 53, that engineer can take the concern to authorities such as the state Attorney General, or to supervisors inside the company, with legal protection against retaliation. That backing makes it safer to alert regulators to potential harms.

Patchwork Politics: The Regulatory Turf War

The impact of SB 53 on AI companies goes far beyond California’s borders. The law’s requirements for safety reports and risk disclosures are already intensifying the ongoing debate over who gets to set the rules for AI. Some tech firms warn that a “patchwork of regulations” across states could make compliance complex and costly. Others, like Anthropic, have voiced support, seeing the law as a step toward responsible innovation.

- AI industry compliance with new laws is now a moving target, as companies must adapt to California’s standards while watching for similar moves in other states.

- Pro-AI super PACs have emerged to fight state-level AI rules such as SB 53, signaling that the political battle over AI regulation is only heating up.

For the AI sector, this means navigating not just technical challenges, but also a rapidly shifting legal and political landscape. The law’s adoption is likely to impact regulatory discussion and policy beyond California, as other states consider whether to follow suit or chart their own course.

New Norms: Transparency, Reporting, and Industry Culture

SB 53 aims to set a new norm in AI regulation by making transparency and proactive risk management routine. AI companies must now regularly disclose their safety protocols and the greatest risks posed by their systems. This is expected to influence industry culture, making open discussion of risks and ethical concerns a standard part of AI development.

With AI whistleblower protections codified in law and a growing patchwork of state-level rules, the industry faces a new normal—one where accountability, transparency, and ethical reporting are not just encouraged, but required. As political groups and tech firms react in unexpected ways, California’s leadership is pushing the entire field toward higher standards and greater public trust.

Conclusion: The Law’s Real Test Will Be Cultural, Not Just Legal

As you reflect on California’s new regulatory framework for artificial intelligence, it’s clear that S.B. 53 is more than just another law on the books; it’s a signal flare for the entire tech industry. The Transparency in Frontier Artificial Intelligence Act sets some of the toughest AI governance standards yet, demanding that companies not only disclose their safety protocols but also confront the real-world risks and societal impact of their creations. But while the legal requirements are now in place, the true test for this legislation will be cultural, not just legal.

California’s bold move could inspire a wave of similar laws across other tech-centric states. As debates over ethical concerns in artificial intelligence intensify, S.B. 53 marks a turning point in the national conversation about AI ethics and regulatory norms. The law’s influence is likely to spread far beyond state lines, especially as policymakers and industry leaders across the country watch to see how these new rules play out in practice.

Yet, the ultimate effectiveness of S.B. 53 will depend on more than compliance checklists or legal disclosures. It will hinge on whether both the public and the tech industry embrace a culture of transparency and accountability. Will companies see these new standards as a foundation for responsible AI innovation, or merely as hurdles to clear? Will consumers and employees feel empowered to speak up about AI risks and ethical concerns, or will old habits of secrecy and self-regulation persist? As Cecilia Kang of The New York Times aptly put it,

“Regulation isn’t the enemy of innovation; sometimes it’s just the seatbelt.”

The passage of S.B. 53 sets a precedent, but its long-term impact will be shaped by how people—inside and outside the industry—respond to the call for openness. If California’s push for greater AI transparency and accountability is met with genuine cultural change, it could help build public trust and set new norms for ethical AI development. On the other hand, if the law is seen as just another box to tick, its promise may fall short.

As you consider the future of AI governance, remember that the law is only the first step. The real challenge lies in shifting attitudes and expectations—within boardrooms, among engineers, and across society. Will cultural attitudes about AI transparency shift? Time (and a few more bill signings) will tell. And if future AI ethics talks start to sound more like family feuds at Thanksgiving, don’t say California didn’t warn us.

In the end, S.B. 53’s legacy will be written not just in legal language, but in the everyday choices and conversations that shape how artificial intelligence is built, used, and trusted. California may have set the standard, but it’s up to all of us to decide what comes next.